WriteDelimitedSpreadsheet corrupts file when transposing

05-08-2024 05:43 AM

@altenbach wrote:

@antoniobeta wrote:

Thanks, all, for your responses. @altenbach, thanks for the links; I will investigate them.

You all were right, the null characters and the Unicode/ASCII confusion were the reasons I was not getting the results I wanted.

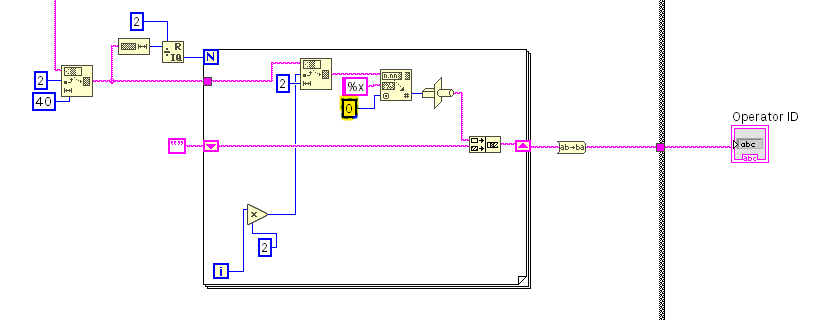

I traced the problem to the part of my code that translates the hex data to ASCII. The highlighted constant was a U32, which produced a wider data type that LabVIEW padded with zeros. Changing it to U8 resolved the issue.

This is the suggested solution of eliminating the null characters, but applied at the root of the problem.
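
A rough Python sketch of the effect described above, not a transcription of the LabVIEW code in the picture. It assumes the character codes end up flattened to bytes in big-endian order; the helper name `flatten_codes` and the sample codes are hypothetical.

```python
import struct

def flatten_codes(codes, fmt):
    """Pack each character code as a big-endian integer of the given struct format."""
    return b"".join(struct.pack(">" + fmt, code) for code in codes)

codes = [0x4F, 0x4B]  # hypothetical sample: the character codes for "O" and "K"

as_u32 = flatten_codes(codes, "I")  # 4 bytes per code: three zero bytes, then the character
as_u8 = flatten_codes(codes, "B")   # 1 byte per code: no padding

print(as_u32)  # every character is preceded by three null bytes
print(as_u8)   # plain two-byte text
```

With the wider type, three of every four bytes are nulls, which is the kind of content a text viewer can misinterpret.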

Of course, you always got the result you wanted, and reading the file back properly with LabVIEW would faithfully restore the data at any later time. The ONLY problem was with Windows Notepad's ability to display the content correctly, a cosmetic issue outside of your control.

Of course, whatever you are doing with the code in the picture does NOT translate "HEX data to ASCII" (a very vague term!). For example, you seem to be creating binary data from hex-formatted strings. Binary data has no business in text files! Period. A human-readable text file should not contain nonprintable characters except well-known delimiters. Why not write the original hex-formatted string instead? A string of characters is just that. The ASCII standard just assigns meaning to bit patterns and defines how display devices should interpret them.

It is possible that I misunderstand your code. Can you attach your VI containing some typical 40-character input strings? Are you writing that "operator ID" to the text file?

Hi!

I have attached a snippet of my code with the text input you asked for. I am receiving a text string that contains the "hex" data. I first translate it to numbers (because what I receive is a string) and then translate those numbers to the string text value "translated to text".

I don’t know whether my last explanation has made things worse. I would love to know how to perform those actions in the correct way.
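
For readers following along outside LabVIEW, the two steps described above (hex text to numbers, numbers to text) can be sketched in Python. This is only an illustration under the assumption that every two hex characters encode one ASCII byte; the function name and the sample input are hypothetical, not taken from the attached VI.

```python
def hex_text_to_ascii(hex_text: str) -> str:
    """Decode a string of hex digit pairs into the ASCII text it represents."""
    # Step 1: split the text into two-character pairs and parse each as a number (0..255).
    pairs = [hex_text[i:i + 2] for i in range(0, len(hex_text), 2)]
    codes = [int(pair, 16) for pair in pairs]
    # Step 2: treat each number as one byte and decode the bytes as ASCII.
    return bytes(codes).decode("ascii")

print(hex_text_to_ascii("48656C6C6F"))  # Hello
```

Each number is stored as a single byte here, which corresponds to using U8 rather than a wider integer type.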

05-08-2024 06:09 AM - edited 05-08-2024 06:10 AM

Hi Antonio,

@antoniobeta wrote:

I would love to know how to perform those actions in the correct way.

Something like this?

(There might be faster ways, but this one should be easy to understand…)

05-08-2024 09:01 AM

Too bad you attached in LabVIEW 2017. In 2019+ you could just use a map LUT. 😄

(You probably could use variant attributes in older versions, but let's not do that.)

05-08-2024 09:08 AM - edited 05-08-2024 09:17 AM

05-08-2024 09:46 AM

@altenbach wrote:

Too bad you attached in LabVIEW 2017. In 2019+ you could just use a map LUT. 😄

(You probably could use variant attributes in older versions, but let's not do that.)

That's interesting. I cannot find information on why LUTs are LV2019 and up. Do you have a link to share?

05-08-2024 09:50 AM

@altenbach wrote:

Here's what I would do in older versions.

Or

Just to check that I understand: in the first example, you convert the input string to a U16 array because each "hex number" in it is composed of two characters (U8 + U8 = U16). After that, it is just a matter of assigning the U8 array its ASCII values. Is that right?

05-08-2024 10:20 AM

I use a map as the LUT. Maps are new in LabVIEW 2019 (see my presentation here, for example). LUTs have been around since ancient times, and there are many ways to implement them. A map is useful.
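
As a text-language analogy only (the LabVIEW diagram itself is not reproduced here), a Python `dict` can play the role of a map used as a LUT. The sketch below assumes the table maps each two-character hex pair to the character it encodes; `HEX_LUT` and `decode_with_lut` are hypothetical names.

```python
# Build the lookup table once: "00".."FF" -> the character with that code.
HEX_LUT = {f"{code:02X}": chr(code) for code in range(256)}

def decode_with_lut(hex_text: str) -> str:
    """Translate hex digit pairs to text by looking each pair up in the table."""
    pairs = (hex_text[i:i + 2].upper() for i in range(0, len(hex_text), 2))
    return "".join(HEX_LUT[pair] for pair in pairs)

print(decode_with_lut("48656c6c6f"))  # Hello
```

The table is built once and every later translation is a plain lookup, which is the appeal of a LUT regardless of how it is implemented.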

@antoniobeta wrote:

@altenbach wrote:

Just to check that I understand: in the first example, you convert the input string to a U16 array because each "hex number" in it is composed of two characters (U8 + U8 = U16). After that, it is just a matter of assigning the U8 array its ASCII values. Is that right?

I cast the string to a U16 array, auto-index on the FOR loop, and cast each 2-byte value back to a string, so we get two bytes at a time.
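
A minimal Python sketch of that chunking idea, assuming big-endian byte order and an even-length input; the sample bytes are hypothetical. The bytes of the string are reinterpreted as 16-bit integers, and each integer is then reinterpreted back as a two-byte string.

```python
import struct

text = b"4F4B"  # hypothetical input: four ASCII characters, i.e. two hex pairs

# "Cast" the bytes to an array of unsigned 16-bit integers (two characters each).
count = len(text) // 2
u16_values = struct.unpack(f">{count}H", text)

# Loop over the array and "cast" each 16-bit value back to a two-byte string.
pairs = [struct.pack(">H", value) for value in u16_values]

print([hex(v) for v in u16_values])  # ['0x3446', '0x3442']
print(pairs)                         # [b'4F', b'4B']
```

The U16 values themselves are meaningless as numbers; they are only a convenient way to walk through the string two bytes at a time.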

You need to be careful with terminology! "Convert" (as in most tools on the Conversion palette) tries to keep the value the same while changing the bit pattern: the bits of the numeric value 10 in U8 are very different from those of 10 in DBL, but both represent the same value. In contrast, a cast tends not to touch the bit pattern but interprets it as something else, a U16 array in this case. (Type Cast is somewhat dangerous if you don't know what you are doing, so make sure you know your datatypes and think in binary.)
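
The distinction can be illustrated in Python with the standard `struct` module. This is a sketch using big-endian packing; the variable names are hypothetical and the single-precision example is added only for illustration.

```python
import struct

# Convert: the value stays 10, but the bit pattern changes completely.
value_u8 = 10
value_dbl = float(value_u8)
bits_u8 = struct.pack(">B", value_u8)    # one byte: 0A
bits_dbl = struct.pack(">d", value_dbl)  # eight bytes: 40 24 00 00 00 00 00 00

# Cast: the bit pattern stays the same, but it is read as a different type.
bits_sgl = struct.pack(">f", 10.0)           # four bytes of a single-precision 10.0
(cast_u32,) = struct.unpack(">I", bits_sgl)  # the same four bytes read as a U32

print(value_dbl == value_u8)  # True: conversion preserved the value
print(bits_dbl.hex())         # 4024000000000000
print(cast_u32)               # 1092616192: casting did not preserve the value
```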

05-09-2024 01:01 AM

Thanks for the explanation. I will make an effort to think carefully about when to use the word "convert" or "cast" in the future!
