
Labels

- Analysis & Computation 305

- Development & API 2

- Development Tools 1

- Execution & Performance 1,027

- Feed management 1

- HW Connectivity 115

- Installation & Upgrade 267

- Networking Communications 183

- Package creation 1

- Package distribution 1

- Third party integration & APIs 289

- UI & Usability 5,456

- VeriStand 1

Idea Statuses

- New 3,061

- Under Consideration 4

- In Development 4

- In Beta 0

- Declined 2,639

- Duplicate 711

- Completed 336

- Already Implemented 114

- Archived 0


Showing ideas with label HW Connectivity.


Status: Declined

Submitted by Karsten_Dallmeyer on 12-01-2011 09:07 AM

1 Comment

Tags:

- debug

- LabVIEW RT

Status: New

Submitted by Brian.Schork on 08-25-2011 01:13 PM

2 Comments

Labels:

Status:

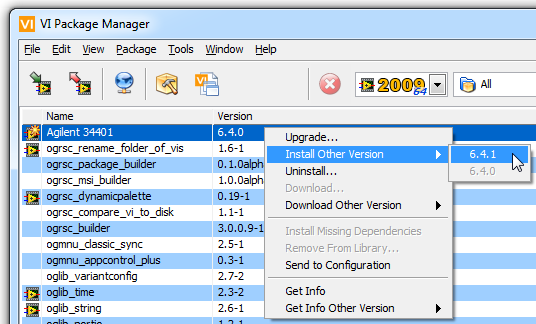

Completed

Submitted by

pjtanz

on

07-08-2011

01:28 PM

13

Comment

Tags:

- build

- dependencies

- Toolkit


Status: Declined

Submitted by Mark_Yedinak on 04-19-2011 08:19 AM

5 Comments

Labels: